The race to regulate AI is producing an array of approaches, from light-touch to highly prescriptive regimes. Governments, industry, and standards-setting bodies are rushing to weigh in on what guardrails should apply to AI developers and deployers. Yet regulatory conversations often take for granted the answer to one fundamental question: What is AI?

At its core, AI inspires a belief that a machine or system can mimic a human’s cognitive abilities. But how does that belief translate into a defined concept that can guide policymakers? Moreover, how should businesses think about what constitutes AI when those charged with writing the rules or setting the standards lack agreement?

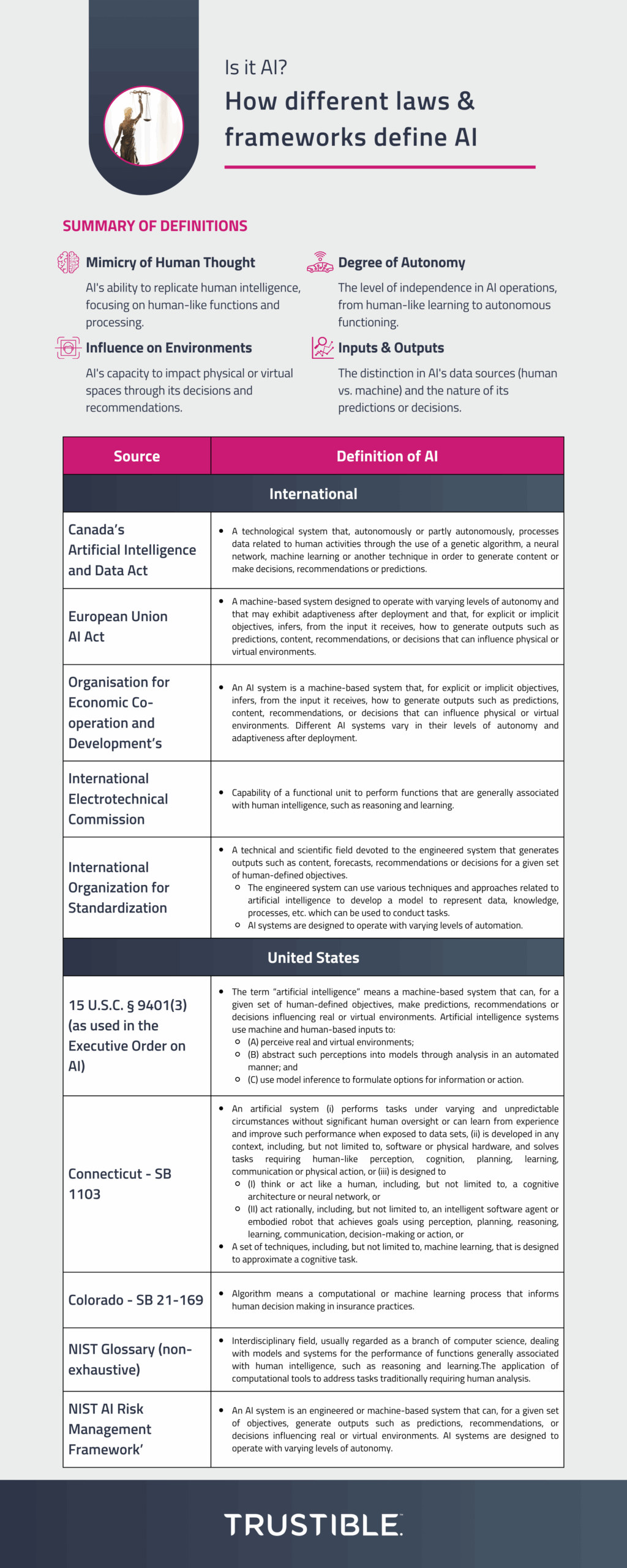

AI definitions across legislation and standards-setting bodies tend to raise four key themes: (i) the system's ability to mimic human thought; (ii) the degree to which the system influences its environment; (iii) the sources of the system's inputs and the nature of its outputs; and (iv) the system's degree of autonomy. These themes underscore how existing tools (e.g., algorithms, models, rules-based systems, and statistical programs) may generally fall into the bucket of AI yet fall short of being AI from a compliance perspective. To illustrate the practical scope of the various definitions, the discussion of each theme considers how the language would apply to the following use cases:

- an algorithm that recommends products based on the user’s prior purchases and browsing history;

- a generative AI system, such as ChatGPT; and

- a risk scoring tool that weighs a person’s behaviors and activities (e.g., daily amount of exercise) to produce a risk score that is used as a factor for insuring the person.

One point to keep in mind throughout this discussion is the interchangeable use of the terms algorithm, automated decision-making system, AI, and machine learning. In some instances, these terms describe distinct computational functions; in others, they carry the same meaning. This discussion does not explore in depth how the terms differ or overlap, but readers should be aware that all of them have been used to describe complex computational functions.

Beyond analyzing the distinctions across AI definitions, businesses should think about their own concept of AI. Some companies may have an internal definition of AI that guides their work in the space, while others may be in the early stages of establishing one. Regardless of where a business stands, it should understand how regulators and standards-setting bodies conceive of AI as they set out rules and benchmarks for the technology.

Mimicry of Human Thought

Technical definitions of AI tend to focus on the machine’s ability to process information or perform functions similar to humans. For instance, AI definitions from the International Electrotechnical Commission and the National Institute of Standards and Technology’s (NIST’s) AI Risk Management Playbook glossary emphasize a machine’s ability to replicate human intelligence. Interestingly, Connecticut’s law governing state use of AI (SB 1103) also uses an AI definition that emphasizes a system’s human-like abilities.

These definitions are less concerned with the system's inputs or outputs. Moreover, the language seems to narrow the scope of AI because it focuses on the system's human-like function. Arguably, ChatGPT (or a similar generative AI chatbot) is the only one of the three use cases that aligns with this aspect of the definitions, because the interaction between the user and the system is human-like. Additionally, ChatGPT can draw on earlier exchanges in a conversation to refine its outputs.

Conversely, AI definitions written by some governmental bodies emphasize input data, the system's intended purpose, and the resulting outputs. These definitions downplay the more abstract "human-like" functions of AI systems while putting a premium on the system's impact. For example, the Organisation for Economic Co-operation and Development's (OECD's) recently updated AI definition addresses how the system infers, from the input it receives, how to generate outputs (e.g., predictions, recommendations, or decisions), and how those outputs can influence real or virtual environments. This approach is similar to the U.S. federal government's definition of AI, which is used in President Biden's Executive Order on AI, as well as to the International Organization for Standardization's (ISO's) AI definition.

This language may cast a wider net over what counts as AI because it focuses on the system's impact, which may be easier to measure than the system's ability to mimic human functions. For instance, the recommendation system, ChatGPT, and the risk scoring system would all fall within the scope of this language.

The differing language should signal to businesses that they need to identify the intended goals of their systems. Clearly defining a system's objectives can help determine whether it is meant to supplant or support human decision making. While some laws are less concerned with the human-like capabilities of AI, a law like SB 1103 may take a narrower view of AI that could leave out systems for which human-like function and output are not the intended goal.

Influence on Environments

Several AI definitions touch on how the machine can influence a given environment (i.e., physical or virtual). The proposed final legislative text of the European Union (EU) AI Act's definition of AI (modeled after the OECD's definition) is concerned with systems that "generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments." This is similar to the definition of AI used by the U.S. federal government and in the NIST AI Risk Management Framework. However, the idea of a system's outputs influencing an environment is not clear-cut, because the definitions using this language do not address the degree to which the output influences the environment. For instance, the product recommendation algorithm may not influence a real environment because the user can ignore the recommendations.

In some instances, an AI definition does address the system's degree of influence, as in Colorado's law restricting insurers' use of external consumer data and in the U.S. federal government's AI definition. Both definitions consider how the output informs a person's decisions or actions. The product recommendation system and the risk scoring system would exert this kind of influence because both produce outputs that inform a decision or action. ChatGPT may also exhibit this type of influence, but that would depend on the user's intended goal.

The challenge for businesses is to understand how these definitions align with the purpose of their AI systems. Where a company's system provides information to an individual or is tasked with identifying an issue within the company's network, the question arises whether the system is influencing its environment.

Inputs and Outputs

An AI system takes an input (e.g., data) and is expected to produce some output. The range of AI definitions, however, offers differing perspectives on a system's inputs and outputs.

First, there is a lack of consensus about input sources. The U.S. federal government's AI definition is concerned with inputs generated by both humans and machines, whereas the EU AI Act and Canada's proposed Artificial Intelligence and Data Act (AIDA) do not draw that distinction. Some AI definitions, like those in the NIST AI Risk Management Framework and SB 1103, do not mention inputs at all.

The distinction matters because, where input sources are not defined, other aspects of the definition are necessary to understand its scope. For instance, the product recommendation system relies on input both from the user (i.e., browsing history) and from a machine (i.e., digitally stored purchase logs). The system fits the EU AI Act's definition, which refers to the input a system receives, but the same could not be said of the NIST AI Risk Management Framework's definition because that definition does not address inputs at all.

Second, the various AI definitions generally agree that system outputs include predictions, recommendations, or decisions. However, some definitions are concerned only with those specific outputs, whereas others leave the list of outputs open-ended. For example, AIDA is concerned only with systems that "generate content or make decisions, recommendations or predictions," whereas the outputs lists under the EU AI Act and ISO are more open-ended.

The risk scoring system presents an interesting dilemma because of its output. The final risk score may offer a prediction because it tries to express numerically how much of an insurance hazard a given person poses to the insurance company. However, the risk score could also be more of a snapshot of a person's current lifestyle than a forecast of future behavior. While the laws may be intended to capture risk scoring systems as AI, their definitions could create a loophole for certain systems, particularly where a definition's list of outputs is closed, as under AIDA.

Businesses will need to be mindful of their input sources and the types of output generated. Companies should document their input sources (e.g., whether the input is human- or machine-generated) and the purpose of each input (e.g., whether it relates to a human activity). Businesses should also be aware of their systems' intended outputs, because definitions vary in the types of outputs they cover.

Degree of Autonomy

AI systems generally function with some degree of autonomy. Various AI definitions, such as those in AIDA, the EU AI Act, and ISO, capture this aspect of AI without firmly defining the system's level of autonomy. The functions of all three use cases would fall within these definitions' spectrum of autonomy. However, SB 1103 draws a somewhat firmer line on autonomy, which generally ties back to the system mimicking human-like abilities (e.g., learning from experience and improving performance based on its inputs). Although SB 1103 is an outlier among AI definitions, setting a firmer line on the degree of autonomy narrows the scope of AI. The risk scoring system may not rise to the level of autonomy contemplated by the SB 1103 definition. Likewise, the product recommendation system may not operate with enough autonomy, depending on whether it learns and improves from its data sources.

Businesses will need to consider how existing and future laws may treat their systems' degree of autonomy. While most definitions appear to avoid defining AI by reference to a particular degree of autonomy, there is at least one instance where it matters, and the issue could arise in other regulatory frameworks.

What’s Next?

The rush to rein in AI raises numerous questions about mitigating harms and maximizing benefits. Yet the discussion often overlooks how the technology itself is defined. As more rules and frameworks are proposed and operationalized, it is important to consider how they will align with and diverge from existing AI definitions. Businesses should consider how these definitions will affect their own use cases and systems. Moreover, companies should begin documenting the various functions of their systems to understand how they fit into the ever-growing spectrum of what constitutes AI. Companies considering how to align their internal understanding of AI with existing definitions may want to err on the side of caution and adopt a more inclusive meaning.