The speed at which new general purpose AI (GPAI) models are being developed is making it difficult for organizations to select which model to use for a given AI use case. While a model’s benchmark performance, deployment model, and cost are the primary selection factors, other factors, including a model’s data sources, ethical design decisions, and regulatory risks, must be accounted for as well. These considerations cannot be inferred from a model’s performance on a benchmark, but are necessary to understand whether using a specific model is appropriate for a given task or legal within a jurisdiction.

Information about a model’s data sources, design choices, and other processes is not consistently disclosed by model developers. When it is disclosed, it is often presented in a highly technical format. This creates a barrier for non-technical stakeholders involved in AI governance who need answers to critical questions.

In recognition of this, the final version of the EU AI Act sets disclosure requirements for general purpose AI models. These GPAI requirements are expected to come into effect by mid-2025. While these requirements primarily apply to the developers of GPAI models, organizations that use a non-compliant model within the EU for a ‘high risk’ AI use case may face consequences for non-compliance.

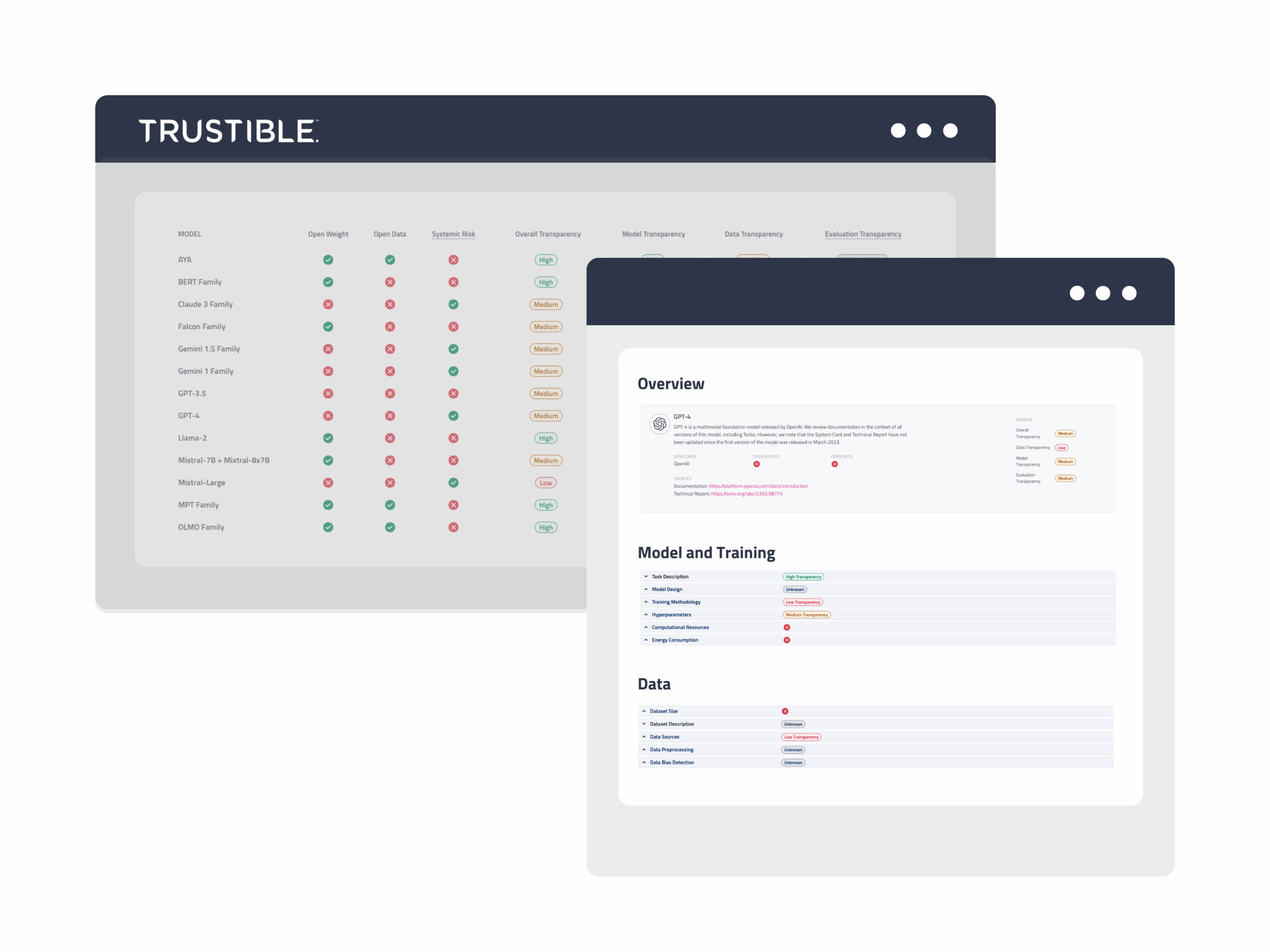

To assist our customers with assessing this risk, Trustible is excited to announce our Model Transparency Ratings. Our internal AI and Policy teams analyzed the top 21 GPAI Large Language Models (or model families) based on the disclosure criteria required under the EU AI Act. Our ratings are based solely on the publicly available documentation disclosed by model providers on their websites, GitHub, HuggingFace, or other official publications. These ratings aim to equip AI governance professionals with key information about AI systems in an accessible format, and to help teams make more responsible and informed decisions that take a model’s ethical and legal risks into account. We intend to update our ratings as model providers make new information available. Our ratings take inspiration from other forms of transparency rating reports, such as financial credit ratings or ESG scores, and do not constitute legal advice.

Evaluation Methodology

To assemble our criteria, Trustible analyzed the final text of the EU AI Act, with a focus on Articles 53 and 55, as well as Annexes XI and XII, which outline the obligations of GPAI providers. In addition to the text of the Act, Trustible’s experts reviewed applicable research on model transparency standards and best practices, including work on datasheets, model cards, and system cards.

In addition to the core requirements for GPAI models, the AI Act also defines an additional category called ‘General Purpose AI Models with Systemic Risk’. The exact regulatory criteria for which models fall into the ‘systemic risk’ category will be clarified over the coming months. However, an initial total training compute threshold of 10^25 FLOPs (floating point operations) was set, alongside language suggesting that most ‘state of the art’ models fall into this category. Models with ‘systemic risk’ have additional requirements under the AI Act, including additional model evaluations and impact assessments. While not an official definition, Trustible’s experts categorize the largest, highest performing models, including GPT-4, Claude 3 Opus, Gemini 1.5, and Mistral-Large, as ‘systemic risk’ models for the purposes of our transparency ratings. Trustible will update ratings as the EU AI Office, or model developers, officially designate systemic risk models.
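As a rough illustration of the compute threshold, the sketch below compares an estimated training compute figure against 10^25 floating point operations. The `estimate_training_flops` helper uses a common rule of thumb (about 6 operations per parameter per training token); that heuristic, the function names, and the example model are assumptions for illustration and are not how the AI Act or Trustible define or measure training compute.

```python
# Threshold mentioned in the text: 10^25 floating point operations
# of total training compute.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rule-of-thumb estimate (an assumption, not taken from the AI Act):
    roughly 6 floating point operations per parameter per training token."""
    return 6.0 * n_parameters * n_training_tokens


def exceeds_compute_threshold(training_flops: float) -> bool:
    """True when total training compute is above the 10^25 threshold."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


# Hypothetical model: 70 billion parameters trained on 2 trillion tokens.
example_flops = estimate_training_flops(70e9, 2e12)
print(f"estimated compute: {example_flops:.1e} FLOPs")
print("above threshold:", exceeds_compute_threshold(example_flops))
```

Under these assumptions the hypothetical model lands well below the threshold. In practice many providers do not disclose parameter counts or token counts, so an estimate like this is often not possible from public documentation alone.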

In addition to the ‘systemic risk’ models, the EU AI Act also has a carve-out for truly ‘open’ models. While open source models are technically exempt from the GPAI requirements, this is based on the assumption that the system is sufficiently well documented to convey its potential risks. In addition, the text of the Act does not clearly define what ‘open source’ AI is, and there is significant debate in the AI research community about this. Our own analysis found that, while many models are ‘open source’ with the ability to download and use the model weights freely, there is little information about the datasets used to train the system. Given that many risks of a model stem from what data is used, we opted to keep all open source models in our ratings and annotate which models provide downloadable datasets as opposed to only downloadable model weights.

Based on the text of the EU AI Act, Trustible identified 30 criteria to assess readiness for the EU AI Act. Each criterion was either a binary evaluation or evaluated on a 4-point scale (Unknown, Low Transparency, Medium Transparency, High Transparency). This was done to capture the nuances of the AI Act’s requirements, as well as to better communicate to our customers when insufficient information was being provided to properly understand the risks of a particular system. Requirements such as posting the ‘Acceptable Use Policy’ of a model were easy to determine on a binary yes/no scale. Other requirements, such as ‘Training Data Sources’ disclosure, were more open-ended, and the EU has not clearly determined the criteria for what is ‘sufficient’ for compliance. We based our 4-point scale on whether a model deployer would have enough information to identify whether the model was appropriate to use for a given task. Our baseline maps to the ‘Medium Transparency’ threshold in most instances, with ‘High Transparency’ being awarded when documentation shares details beyond what is required by the AI Act and by established best practices.
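The two kinds of criteria described above could be represented roughly as follows. This is a minimal sketch, not Trustible’s implementation: the `Transparency` enum and the `meets_baseline` helper are hypothetical names, and the only facts taken from the text are the four scale labels and the ‘Medium Transparency’ baseline.

```python
from enum import IntEnum
from typing import Union


class Transparency(IntEnum):
    """The 4-point scale from the text, ordered from least to most transparent."""
    UNKNOWN = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# A label is either a yes/no answer (binary criteria) or a point on the scale.
Label = Union[bool, Transparency]


def meets_baseline(label: Label) -> bool:
    """Binary criteria pass on 'Yes'; graded criteria pass at Medium or above."""
    if isinstance(label, bool):
        return label
    return label >= Transparency.MEDIUM


# Hypothetical labels for two criteria named in the text.
acceptable_use_policy = True                 # binary: policy is posted
training_data_sources = Transparency.LOW     # graded: little detail disclosed

print(meets_baseline(acceptable_use_policy))
print(meets_baseline(training_data_sources))
```

Using an ordered enum keeps ‘Unknown’ distinct from ‘Low Transparency’ while still allowing a simple comparison against the baseline.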

After establishing our criteria, we then identified the most commonly used LLMs, as well as those available on leading Cloud Service Providers. Our goal is to cover any models that organizations may deploy for production use cases either through direct integration, or as the base model for fine-tuning. Many models offer several versions/variants, each fine-tuned towards a specific task, such as a ‘chat-bot’, or trained with a different number of parameters. Many of these variants released at the same time shared core documentation and their transparency ratings were often identical, so for the initial version of our ratings, we grouped many of these variants into model ‘families’. We intend to expand on model variants in the future.

Beyond the grouping into model families, some of the LLMs we identified, such as Cohere for AI’s AYA model, were fine-tuned versions of pre-existing models. This highlights a general challenge with model documentation, disclosures, and provenance. Trustible’s platform itself supports ‘recursive’ model cards to account for this. However, for the purposes of our ratings, we sought to only evaluate the transparency of the specific layer that we were analyzing. In the example of AYA, we only evaluated the transparency by Cohere, and not the transparency of the mT5 model by Google that it’s based on. Our goal is to create ratings of these other base models, and then be able to combine a ‘parent’ model’s ratings with its ‘child’ ratings. We plan on explaining this objective in future work.

To evaluate each model, Trustible looked at the following sources:

- The creator’s website;

- Press releases;

- Public Github repositories;

- HuggingFace model pages; and

- Technical papers linked to those sources.

We sought to only analyze first party documentation by the model creators themselves, even when the model was available on multiple platforms. After an initial read-through of the documentation, we iterated through our transparency criteria and assigned each criterion the label we felt was most appropriate, along with a short summary justifying the label. We also noted the exact source that led us to assign the score for each criterion. After assembling all of the scores for each section, we gave an overall rating based on the number of criteria that received either a ‘Yes’ label (for binary criteria) or at least a ‘Medium Transparency’ label.
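The bookkeeping described above (a label, a justification, and a source for each criterion, followed by a count of passing criteria) can be sketched as below. The `CriterionRating` record, the helper names, and the sample entries are hypothetical. The text does not state how the count maps to an overall label, so this sketch stops at the count.

```python
from dataclasses import dataclass
from typing import List, Union

# Graded labels that count toward the overall rating, per the text.
PASSING_GRADED_LABELS = {"Medium Transparency", "High Transparency"}


@dataclass(frozen=True)
class CriterionRating:
    criterion: str
    label: Union[bool, str]   # True/False for binary criteria, else a scale label
    justification: str        # short summary of why the label was assigned
    source: str               # where in the first party documentation it was found


def passes(rating: CriterionRating) -> bool:
    """'Yes' for binary criteria, or at least 'Medium Transparency' for graded ones."""
    if isinstance(rating.label, bool):
        return rating.label
    return rating.label in PASSING_GRADED_LABELS


def count_passing(ratings: List[CriterionRating]) -> int:
    return sum(1 for r in ratings if passes(r))


# Hypothetical ratings for an unnamed model; the sources are placeholders.
sample = [
    CriterionRating("Acceptable Use Policy", True,
                    "Policy is posted publicly.", "placeholder: provider website"),
    CriterionRating("Training Data Sources", "Low Transparency",
                    "Only a general description of data.", "placeholder: technical paper"),
    CriterionRating("Model Architecture", "High Transparency",
                    "Architecture described in detail.", "placeholder: model page"),
]

print(f"{count_passing(sample)} of {len(sample)} criteria pass")
```

Keeping the source next to each label makes every rating traceable back to the documentation that supports it.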

Results & Analysis

In our initial ratings release, we analyzed 21 model families, focusing only on LLMs announced or released before May 2024. Our main focus was on LLMs hosted on Microsoft Azure, AWS Bedrock, and Google Vertex, as well as those used in production use cases by our partners. We included both open source and proprietary models. Our ratings reflect the state of public model documentation between late February and mid-March 2024, and our EU AI Act criteria were based on the final text of the Act passed by the EU Parliament on March 13, 2024.

For purposes of analysis, we grouped our criteria into 3 general categories: Model Transparency, Data Transparency, and Evaluation Transparency, and then weighted them together to assign an Overall Transparency label. Our ‘Evaluation Transparency’ category is reserved for ‘systemic risk’ models and reflects their transparency with respect to the increased obligations for the largest models, which include impact assessments and red-teaming. Standard model evaluation approaches such as automated benchmarks, internal testing, and other validation techniques were included in the ‘Model Transparency’ criteria.
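One way to picture the category weighting is the sketch below. The weights, the 0-to-1 category scores, and the function name are all hypothetical, since the text does not publish the actual weighting. The only behavior taken from the text is that the evaluation category applies to ‘systemic risk’ models only.

```python
from typing import Dict

# Hypothetical weights for illustration only; not Trustible's actual weighting.
HYPOTHETICAL_WEIGHTS: Dict[str, float] = {
    "model": 0.4,
    "data": 0.4,
    "evaluation": 0.2,
}


def overall_score(category_scores: Dict[str, float], systemic_risk: bool) -> float:
    """Weighted average of category scores, each assumed to lie in [0, 1].

    The evaluation category is included only for systemic risk models, and
    the weights are renormalized over the categories that apply.
    """
    categories = ["model", "data"]
    if systemic_risk:
        categories.append("evaluation")
    total_weight = sum(HYPOTHETICAL_WEIGHTS[c] for c in categories)
    weighted = sum(HYPOTHETICAL_WEIGHTS[c] * category_scores[c] for c in categories)
    return weighted / total_weight


# Hypothetical scores: strong model documentation, weak data documentation.
standard_model = overall_score({"model": 1.0, "data": 0.5}, systemic_risk=False)
largest_model = overall_score({"model": 1.0, "data": 0.0, "evaluation": 1.0},
                              systemic_risk=True)
print(round(standard_model, 2), round(largest_model, 2))
```

Renormalizing the weights keeps scores comparable between models that are and are not assessed on the evaluation category.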

Open Source Models

Perhaps unsurprisingly, open source models (defined as at least having open model weights) were, on average, more transparent than proprietary models. No open-weight model received less than a ‘Medium’ score on ‘Overall Transparency’. In addition, many models that shared, or pointed to, their datasets also took the time to analyze those datasets in depth, and almost all of the ‘Open Data’ models received a ‘High’ label for Data Transparency, with the exception of Vicuna.

Proprietary Models

Many of the proprietary models that can only be accessed through APIs, or similar cloud deployment models, were generally less transparent in their documentation. In particular, many models from top AI companies like OpenAI, Anthropic, and Google disclosed little information about their model architecture. Our criteria are not based on the expectation that these models disclose trade secrets, but on the model design, compute usage, and parameter size requirements of the AI Act. Documentation for proprietary models often blended technical reports with marketing materials comparing benchmark performance only to a select group of direct competitors.

Systemic Risk Models

The EU AI Act imposes additional transparency requirements for models with “systemic risk”. These include a detailed discussion of Evaluation Strategies and of Adversarial Testing/Safety Measures. Most of the providers of the largest models already publish relevant documentation. Even OpenAI, which is not transparent about the Model and Data for GPT-4, provides an extensive system card that details evaluation and safety testing. It’s worth noting that in our ratings effort, we identified 2 sections of the systemic risk evaluation requirements that were poorly defined: testing on the basis of public evaluation protocols, and documentation of system architecture. The EU’s newly created AI Office will be responsible for clarifying these requirements. Regarding the lack of standards for the system architecture, it’s worth noting that all suspected systemic risk models were only available via an API, and therefore additional software components may sit between the user and the actual model, but we found no discussion of these components in any documentation.

Trends

Non-US based Models

Our analysis included several model families created by companies outside of the US. These included the Mistral (France), Yi (China), Falcon (UAE), and AYA and Command R (Canada) models. While there was a trend for these models to be open source, it’s possible that trend may break over time. Mistral’s earlier models were highly transparent; however, their latest model, Mistral-Large, provides limited information and received our lowest Overall Transparency score.

Data Transparency

The top overall trend we saw was the lack of transparency about data sources and related model training methodology. Many of the most recent model releases in our ratings, including Claude 3, Mistral-Large, and Gemini 1.5, received our lowest ratings and disclosed almost no details about their data sources, pre-training processing, quality assessments, or dataset distributional characteristics. In addition to the most recent models, all of the models we predict meet the description of a ‘systemic risk’ model received our lowest rating on data transparency. An understanding of what data sources were used to train a model is one of the most important pieces of information for assessing whether a model is appropriate for a given task, and from a risk management perspective, transparency about this is more critical than model transparency. Only two of the models studied, OLMO and Aya, had a full public dataset with a clearly documented preprocessing procedure. The next most transparent model was MPT, with a clearly documented dataset and pre-processing procedure, but without a released copy of the final dataset. Knowing what dataset was used to train a model is essential to know whether it’s appropriate to use for specific tasks; for example, it helps to know what languages were included, or whether niche information like medical academic literature was collected. Many organizations currently ‘probe’ LLMs in order to infer this, but there remain risks in the unknown.

Limitations

Our analysis was done purely on the publicly accessible documentation shared for each model. We acknowledge that model providers may be willing to share additional information confidentially. However, we found no publicly specified processes, contact information, or forms for doing so.

Our initial focus was on Large Language Models (LLMs), largely due to their high level of adoption by customers and our teams’ familiarity with text/language models. Many image, video, and audio models may also be considered ‘GPAI’ models, and each can have its own forms of biases and risks. We aim to evaluate these in the future.

Several of our evaluation criteria were evaluated on a 4-point scale (Unknown, Low, Medium, High), and while we drafted clear annotation criteria for each level, we acknowledge some degree of subjectivity.

We aligned our analysis with the General Purpose AI requirements of the EU AI Act. Our analysis is not meant to provide a legal interpretation of the EU AI Act or the requirements for General Purpose AI. It is our opinion that a ‘Medium’ transparency rating would satisfy the requirements under the EU AI Act. However, our assignment of a ‘Medium’ or ‘High’ transparency rating is meant to qualify the level of disclosure across models and does not mean that a model is compliant under any regulatory regime.

Using the Ratings & Future Work

Our transparency ratings are intended to help our customers make more informed decisions about which model to use for any given use case. Our process aims to translate the content and quality of highly technical documentation into clear business, legal, and risk considerations that a wide range of users can interpret. We offer our ratings directly in the Trustible platform, and we fully disclose our evaluation criteria, our summary of the results, and the exact sections of the EU AI Act we based our criteria on.

We intend to expand on these ratings to help our customers navigate the growing complexity of AI governance. We plan to keep our model ratings up to date, add new models as they’re announced, and extend the scope of what our ratings cover. Our goal is to help our customers understand which benchmarks a given model was evaluated against, whether those benchmarks are significant, and what bearing they have on the appropriateness of a model for a given task.

If you have comments, questions, or feedback about our model ratings, or about Trustible in general, please contact us at: [email protected]