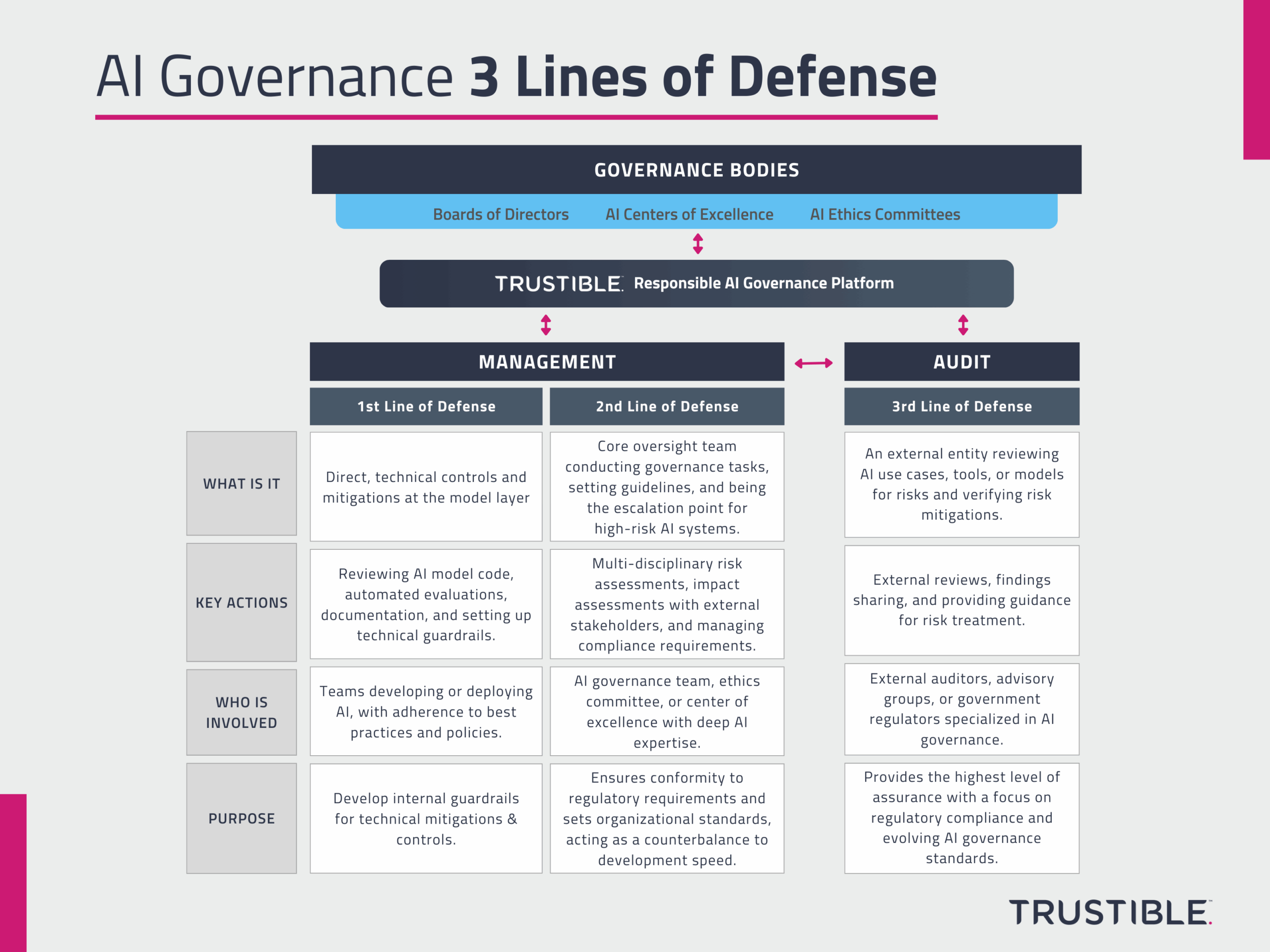

AI governance is complex: it involves multiple teams across an organization working to understand and evaluate the risks of dozens of AI use cases, while managing highly complex models with deep supply chains. On top of the organizational and technical complexity, AI can be used for a wide range of purposes, some of which are relatively safe (e.g. an email spam filter), while others pose serious risks (e.g. a medical recommendation system). Organizations want to use AI responsibly, but struggle to balance innovation and adoption for low risk uses against oversight and risk management for high risk uses. To manage this, organizations need a multi-tiered governance approach that allows easy, safe experimentation by development teams, with clear escalation points for riskier uses.

In this post we describe 3 lines of defense that organizations can adopt to ensure good AI governance. These lines of defense largely map to model risk management requirements present in the financial services industry, with some modifications and adjustments.

First Line – Developers & Deployers

The first line of defense comes in the form of direct, technical controls and mitigations set up at the model layer itself. These may take the form of processes that AI developers follow, such as reviewing AI model code, writing automated model evaluations, documenting key information about the system, or creating input and output filters on top of AI models. They may also take the form of technical guardrails built into systems, or firewalls that detect and block sensitive information going into, or coming out of, models.
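As a rough illustration of the input and output filters mentioned above, here is a minimal sketch in Python. The pattern set and the names `SENSITIVE_PATTERNS`, `redact`, `guarded_call`, and `echo_model` are all hypothetical and exist only for this example; a real deployment would likely rely on a vetted detection tool and on patterns agreed with the governance team.

```python
import re
from typing import Callable

# Hypothetical patterns for illustration only (assumption, not a complete
# or production-grade definition of "sensitive information").
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with placeholders and report which kinds were found."""
    found = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED_{label.upper()}]", text)
        if count:
            found.append(label)
    return text, found


def guarded_call(model: Callable[[str], str], prompt: str) -> tuple[str, list[str]]:
    """Filter the prompt going into `model` and the response coming out of it."""
    safe_prompt, in_hits = redact(prompt)
    response = model(safe_prompt)
    safe_response, out_hits = redact(response)
    # Findings are returned so the caller can log them as governance evidence.
    findings = [f"input:{h}" for h in in_hits] + [f"output:{h}" for h in out_hits]
    return safe_response, findings


def echo_model(prompt: str) -> str:
    """Stand-in for a real model; it simply echoes the prompt it receives."""
    return f"You said: {prompt}"


if __name__ == "__main__":
    reply, findings = guarded_call(echo_model, "Contact jane@example.com, SSN 123-45-6789")
    print(reply)
    print(findings)
```

Wrapping the model call, rather than changing the model itself, keeps the control in the hands of the deploying team and produces a record of what was filtered that can be shared with reviewers.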

The key distinguishing element of the first line of defense is that all the controls and mitigations are put in place by the teams developing or deploying AI for their organizations. Tools may strictly enforce these first line efforts, or the efforts may be enforced through best practices and policies with non-technical enforcement mechanisms.

The first line of defense is sufficient for many organizations, especially those without complex regulatory requirements around their use of AI. However, for highly regulated organizations, or those that need to attest to compliance for various stakeholders, additional oversight can cover gaps in the first line of defense.

Second Line – Oversight Team & Center of Excellence

The second line of defense comes in the form of a core oversight team and the oversight tasks they conduct. This team is empowered to help set organizational AI governance guidelines, and is the first point of escalation for high risk AI systems. Whether in the form of a dedicated AI governance team, AI ethics committee, or center of excellence, this team has deep AI expertise and is also charged with ensuring AI use across the organization conforms to internal and external standards. These teams are equipped to conduct formal multi-disciplinary risk assessments, engage external stakeholders to conduct impact assessments, and manage key regulatory compliance requirements such as post-market monitoring activities.

One element that makes this function an essential second line is that its members are not directly responsible for pushing out products or implementing vendor tools, so they can deliberately serve as a counterweight to development teams who may be incentivized to deploy things quickly. The goal of this team is less to be a ‘department of NO’ and more to help build a responsible AI roadmap and sign off on its deployment. Many of this function's activities involve analyzing AI use cases and models from more than just the business perspective, accounting for the growing complexity of AI systems.

This team, and its oversight activities, can ensure that an organization conforms to regulatory requirements across multiple frameworks and set standards throughout the organization. However, since they are part of the same organization as the developers, they cannot provide the highest level of assurance, and they may not be equipped to stay on top of all the rapidly evolving research in the AI space. Enter the third line of defense.

Third Line – Audit

The third line of defense introduces a formal audit process applied to AI governance. This may be done by a dedicated internal audit team, but high risk uses may require an external audit entity instead. In more extreme cases, the audit may be done by a government regulatory body or another third party audit group. The audit team may focus on organizational practices, technical practices, or both. It will review specific AI use cases, tools, or models for potential risks, and verify that the organization has appropriate risk mitigations in place. It will share its findings with the development team and may provide additional guidance for risk treatment. The key difference between this audit group and the oversight group in the second line is that the audit group does not share the oversight team's incentives or reporting structure.

Having a dedicated audit function shifts many of the incentives involved and also allows external entities to be highly specialized in AI governance matters. While many organizations may not want the additional bureaucracy, external verification can provide the highest level of assurance for an AI system.

Which lines of defense are necessary?

Not every organization will require all three lines of defense in a formal sense. Many smaller companies may rely on their senior AI/ML leaders to act as the second line, in place of a dedicated oversight function or team. These smaller organizations may still use the third line of defense in order to get certified against emerging responsible AI standards such as ISO/IEC 42001.

As many companies grow in their AI maturity, and the regulatory environment becomes more complex, the number of controls in the first line of defense will likely increase, and it will be more difficult to rely on a small group of AI experts to cover all enterprise risks. At this point, a more formal oversight function or role may be necessary. Many larger technology companies have established dedicated responsible AI teams, or ethical AI working groups to achieve this.

The other key consideration for how many lines of defense an organization requires is the sector it operates in. Many emerging AI regulations, such as the EU AI Act, focus compliance requirements heavily on specific applications of AI, such as using AI for financial, educational, or medical purposes. The higher regulatory bar for these AI applications can often only be met with sufficient oversight and resources dedicated to AI governance, and many emerging frameworks mandate audits or assessments of some kind.

How Trustible helps

The Trustible platform is well suited to all three lines: it helps scope out which first line defensive practices are necessary for compliance, serves as the source of truth and main workspace for the second line of defense, and acts as the audit preparation platform and access gateway for external audits in the third line.

While Trustible does not at this time directly modify or assess models, implement access controls, or create model firewalls, it does provide in-depth insights into recommended first line defensive controls based on the use case. Knowing what the appropriate policies and controls are is half the battle, and Trustible comes pre-configured with recommended policy templates, risk taxonomies, and model risk ratings to help organizations build out their desired risk control set from the very beginning of a project. Our vision of integrating with the entire ecosystem of AI/ML tools will enable more first line of defense capabilities over time.

For oversight functions, Trustible acts as the platform that connects the technical first line defenders with the oversight team members. A key challenge at this layer is appropriately translating technical limitations into their business or legal implications and vice versa. Trustible provides regulatory policy insights, risk assessment tools, and guided and structured documentation fields to facilitate the cross-team workflows and make sure key requirements are being verified.

When it comes time for an audit, Trustible can help organizations automatically generate compliance artifacts, map them to relevant audit criteria, and provide paper trails for key approvals and assessments. Auditors can directly access audit evidence in the Trustible platform and engage in dialogue with the auditees. Trustible aims to make the audit process as seamless and automated as possible for both auditees and auditors.