Key Takeaways

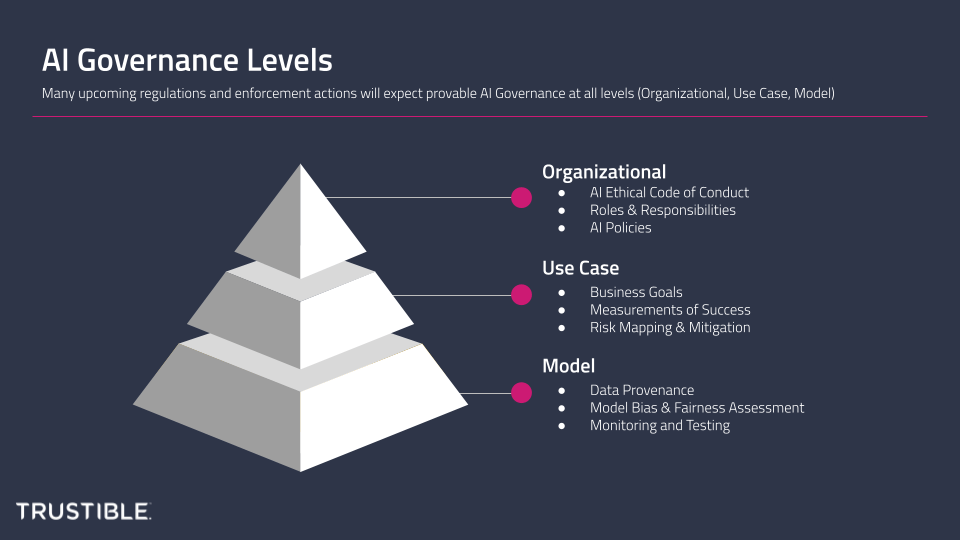

- AI Governance is not just about ML models. Organizational and use case level governance are critical to adopting Responsible AI practices

- Many upcoming regulations and enforcement actions will expect provable AI Governance at all levels (Organizational, Use Case, Model)

- Organizations can start building out their Organizational governance policies and processes now to prepare for upcoming regulations

While AI has been used in enterprise and consumer products for decades, only large tech organizations with sufficient resources were able to implement it at scale. In the past few years, advances in the quality and accessibility of ML systems have led to a rapid proliferation of AI tools in everyday life. The accessibility of these tools means there is a massive need for good AI Governance, both by AI providers (e.g., OpenAI) and by the organizations implementing and deploying AI systems in their own products.

However, “AI Governance” is a broad term that can mean different things to different groups. This blog post seeks to provide some clarity on three ‘levels’ of AI Governance: Organizational, Use Case, and Model. We will describe what they are, who is primarily responsible for them, and why it’s important for an organization to have strong tools, policies, and cultural norms for all three as part of its broader AI Governance Program. We’ll also discuss how upcoming regulations will intersect with each level.

Organizational Level Governance

At this level, AI Governance takes the form of ensuring there are clearly defined internal policies for AI ethics, accountability, safety, etc., as well as clear processes to enforce these policies. For example, to comply with many of the emerging AI regulatory frameworks, it will be important to have a clear process for deciding when and where to deploy AI. This process could be as simple as ‘the tech leadership team needs to approve the AI system’, or as complex as a formal submission process to an AI ethics committee that has go/no-go power over AI uses. Good organizational AI Governance means ensuring that these processes are clear, accessible to internal stakeholders, and regularly enforced.

The NIST AI Risk Management Framework does a good job of laying out the organizational governance policies and processes that should be in place in its ‘GOVERN’ function, and the EU AI Act outlines various organizational requirements in Articles 9, 14, and 17 (although this may still be updated before final passage). The organizational governance standards and policies should be drafted by senior leaders at the organization in consultation with legal teams, who will ultimately be accountable for implementing and enforcing the policies. The organizational policies will largely define when and where an organization will choose to deploy AI, as well as determine what kinds of documentation and governance considerations must be made at the use case level.

Use Case Level Governance

Use Case Level Governance is mostly focused on ensuring that a specific application of AI, for a specific set of tasks, meets all necessary governance standards. The risks of harm from AI are highly context specific. A single model can be used for multiple different use cases (especially foundation or general purpose models such as GPT-4). Some of those use cases are relatively low risk (e.g., summarizing movie reviews), while a very similar task in a different context can be very high risk (e.g., summarizing medical files). Alongside the risks, many of the ethical questions for AI, including simply whether to use AI for a task, are tied to context.

Governance at this level means ensuring that organizations carefully document the goals of using AI for the task, the justification for why AI is appropriate, and the context-specific risks and how they are being mitigated (both technically and non-technically). This documentation should conform to the standards set out in the organizational governance policies as well as any applicable regulatory standards. Both the NIST AI RMF (MAP function) and the EU AI Act (Annex IV) outline the types of ‘use case level’ documentation that should be generated and tracked. Governance at this level will be owned by many different business units. The project leads (often product managers), in consultation with legal/compliance teams, will be the primary group responsible for ensuring proper governance processes are followed and documented at this level. The risks and business goals defined at this level then largely inform what the model level governance expectations should be for a given use case.
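As a rough illustration of what tracking this documentation could look like in practice, here is a minimal sketch of a use case record with a completeness check. The class name, field names, and the rule that every listed risk needs a mitigation are assumptions made for this example. They are not taken from the NIST AI RMF or the EU AI Act, which describe the required content in far more detail.

```python
from dataclasses import dataclass, field, fields


@dataclass
class UseCaseRecord:
    """Hypothetical use case level governance record (illustrative fields only)."""
    name: str
    goal: str = ""              # what the AI system is meant to achieve
    ai_justification: str = ""  # why AI is appropriate for this task
    owner: str = ""             # accountable project lead
    # Maps each context-specific risk to its mitigation (technical or not).
    risks_and_mitigations: dict[str, str] = field(default_factory=dict)


def missing_items(record: UseCaseRecord) -> list[str]:
    """Return the names of empty fields plus any risk lacking a mitigation."""
    gaps = [f.name for f in fields(record) if not getattr(record, f.name)]
    gaps += [
        f"mitigation for risk: {risk}"
        for risk, mitigation in record.risks_and_mitigations.items()
        if not mitigation.strip()
    ]
    return gaps


record = UseCaseRecord(
    name="Summarize medical files",
    goal="Reduce clinician reading time",
    risks_and_mitigations={"omitted diagnosis": ""},
)
print(missing_items(record))
```

A check like this could run before a use case goes to whatever approval process the organizational policy defines, so that incomplete records are caught early.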

Model Level Governance

Model Level Governance is heavily focused on ensuring that the actual technical function of an AI system meets expected standards of fairness, accuracy, and security. It includes ensuring that data privacy is protected, that there are no statistical biases between protected groups, that the model can handle unexpected inputs, that there is monitoring in place for model drift, etc. For the purposes of this framing of AI Governance, the model level also includes the data governance standards applied to the training data. Best practices and standards for model governance have already emerged in specific sectors, often under the name ‘model risk management’, and many great tools have been developed to help organizations identify, measure, and mitigate model level risks.
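To make two of these checks concrete, the sketch below computes an impact ratio across groups (each group's selection rate divided by the highest group's rate, a figure commonly reported in employment bias audits) and a population stability index (PSI) as one simple drift signal. The function names, the equal-width binning, and the small floor for empty bins are choices made for this example. Real programs typically rely on established fairness and monitoring tools and on metrics chosen for the specific use case.

```python
import math


def impact_ratios(selected: dict, total: dict) -> dict:
    """Selection rate of each group divided by the highest group's rate."""
    rates = {g: selected[g] / total[g] for g in total if total[g] > 0}
    top = max(rates.values())
    return {g: (r / top if top > 0 else float("nan")) for g, r in rates.items()}


def population_stability_index(expected, actual, bins: int = 10) -> float:
    """PSI between a baseline sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


ratios = impact_ratios(selected={"A": 50, "B": 30}, total={"A": 100, "B": 100})
baseline = list(range(100))
recent = [v + 50 for v in baseline]
print(ratios, population_stability_index(baseline, recent))
```

What counts as an acceptable ratio or PSI value is a policy decision. Rules of thumb exist, but the thresholds an organization commits to should come from its own use case level risk assessment.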

This is the governance level that many technical AI/ML teams primarily focus on, as they are ultimately responsible for ensuring that the technical underpinnings of an ML system are fundamentally sound and reliable. With the notable exception of NYC Local Law 144, most AI-focused regulations do not prescribe exact model level metrics or standards. For example, the ‘MEASURE’ function of the NIST framework outlines what model governance should look like without being prescriptive about exact metrics or outputs. It expects an organization to have clearly defined its own testing, validation, and monitoring strategies, and to prove those strategies are enforced. Different sectors will likely develop exact metrics and standards for specific uses of AI over time, and these will be determined either by industry trade associations or by sector-specific regulators such as the FDA.
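One way to picture "defining your own strategy and proving it is enforced" is a set of organization-chosen thresholds that every model evaluation is checked against, with the result kept as evidence. The sketch below assumes hypothetical metric names and limits. None of these values come from the NIST framework or any regulation.

```python
# Hypothetical thresholds an organization might set for one use case.
# "min" means the metric must be at least the limit, "max" at most the limit.
THRESHOLDS = {
    "accuracy": ("min", 0.90),
    "impact_ratio": ("min", 0.80),
    "psi": ("max", 0.25),
}


def evaluate(metrics: dict, thresholds: dict = THRESHOLDS) -> list[dict]:
    """Compare measured metrics with the thresholds and return an audit trail."""
    results = []
    for name, (kind, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            status = "missing"  # an unmeasured metric is itself a finding
        elif (kind == "min" and value >= limit) or (kind == "max" and value <= limit):
            status = "pass"
        else:
            status = "fail"
        results.append({"metric": name, "value": value, "limit": limit, "status": status})
    return results


for row in evaluate({"accuracy": 0.93, "psi": 0.31}):
    print(row)
```

Storing the returned rows alongside the model version and date gives reviewers a record that the stated strategy was actually applied, including the cases where a required metric was never measured.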

Why this matters – AI Audits

Many thought leaders and regulators have proposed AI Audits as a governance mechanism to help enforce various legal requirements. However, there isn’t necessarily clear agreement on what an AI Audit is, or what levels of governance it will be auditing. For example, NYC Local Law 144, which targets automated employment decision systems, primarily requires an audit at the model level with specific requirements about a model’s performance and outputs. In contrast, the EU’s AI Act requires a conformity assessment, a type of AI Audit, which focuses heavily on ensuring that specific documentation and processes are in place at the use case and organizational level, and isn’t particularly prescriptive about what model level requirements should be met. That will be left up to each sector or each business to decide for themselves and demonstrate to regulators.

The EU’s approach is similar to other proposed regulatory frameworks in several US states (including DC and California), as well as to the proposed federal Algorithmic Accountability Act. All of these require impact assessments (another form of AI Audit), with particular focus on the use case level and on ensuring that there is appropriate documentation, transparency, and monitoring set up for entire AI systems, while not directly prescribing model level requirements.

Why this matters – Regulatory Enforcement

Many of the broad proposed regulations, such as the EU AI Act, focus heavily on the organizational and use case governance levels. This focus is intuitive: model level requirements are very difficult to define without hampering innovation, can only be set for very specific use cases and types of AI systems, and would change faster than the legislative process can keep up with. In addition, many legislators and regulators are unfamiliar with the ‘lower level’ nuances of AI systems but feel more comfortable writing laws about expected organizational processes and societal outcomes. This comfort level will also likely carry over to how regulators approach enforcing AI-focused laws. Enforcing laws at the model level will be difficult for many regulators, even with the best hired consultants, whereas obvious flaws or gaps at the use case or organizational level will make for an easier enforcement action. Furthermore, many organizations increasingly rely on third-party AI/ML systems and may not own the model level governance, yet they still need use case and organizational governance in place. Organizations cannot make ‘AI Governance’ the exclusive responsibility of AI/ML teams. Instead, they need to ensure that organizational and use case processes and documentation are bulletproof and fully auditable in order to protect themselves from regulatory action.

Final Thoughts

We strongly believe that building accurate, reliable, and unbiased ML models and practicing good data governance are essential for trustworthy and responsible AI systems. Every organization needs to have tools and processes in place to ensure its models and third-party AI systems are well tested and actively monitored. However, many of the ‘stakeholders’ for overall AI Governance, including regulators and the general public, will not have the skills to understand or assess AI Governance practices at the model level, so it’s important to understand the different levels of AI Governance abstraction those stakeholders will be looking at.