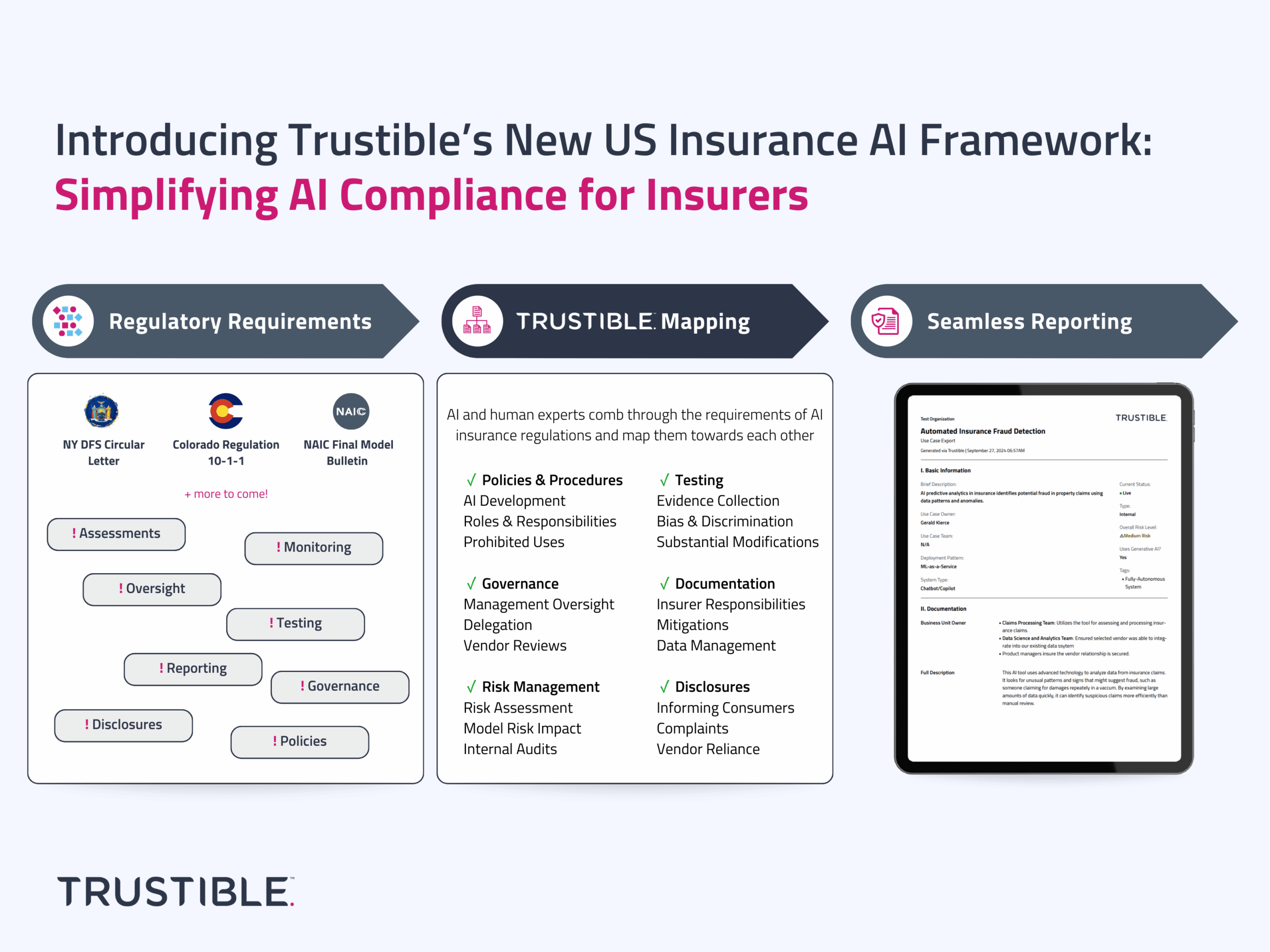

At Trustible, we understand the challenges insurers face in navigating the evolving AI regulatory landscape, particularly at the state level in the U.S. That’s why we’re excited to introduce the Trustible US Insurance AI Framework, which streamlines compliance by synthesizing the latest insurance AI regulations into one comprehensive, easy-to-follow framework embedded in our platform.

Navigating a Complex Regulatory Landscape

Insurance regulators across the country are looking to ensure that AI technologies are used responsibly and don’t introduce unfair biases in decision-making. Regulators are focusing on issues like preventing discriminatory practices in AI models and requiring insurers to establish robust risk management programs. As a result, insurers must now navigate a complex web of compliance requirements across multiple states, each with its own set of rules governing the use of AI in underwriting, pricing, and other critical processes.

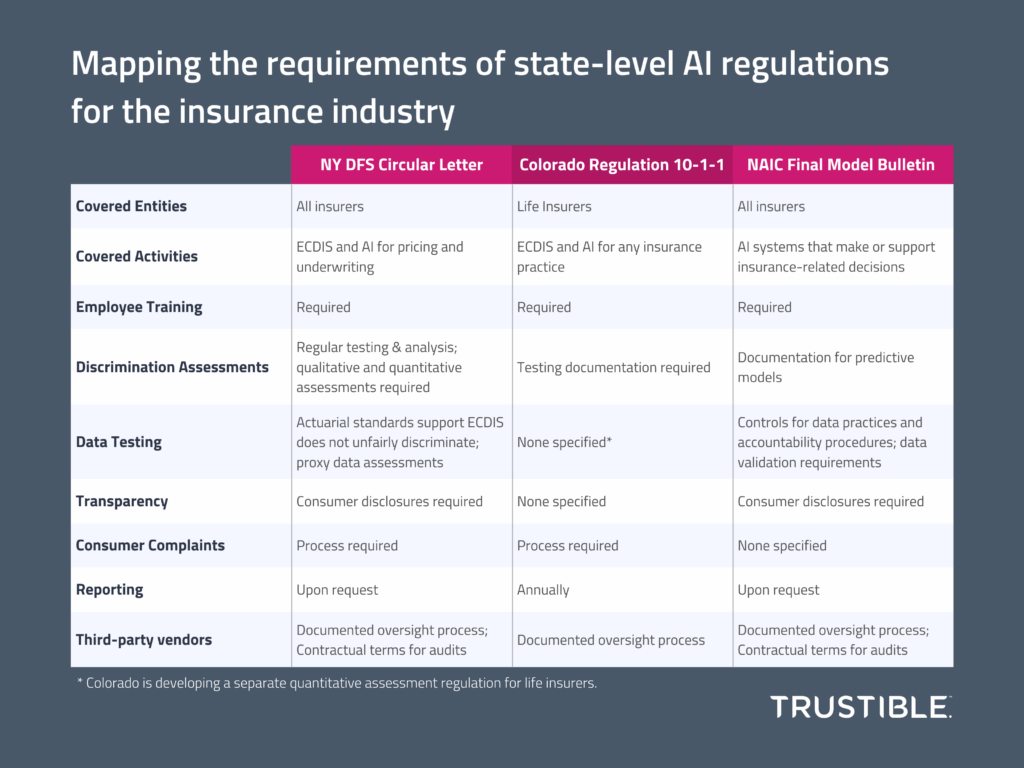

- Colorado Life Insurance Regulation (September 2023): Requires life insurers using external consumer data and information sources (ECDIS) to establish risk management programs that prevent unfair discrimination. Insurers must submit compliance reports to the Colorado Division of Insurance.

- New York Department of Financial Services (NY DFS) Circular Letter No. 7 (July 2024): Offers guidance on the use of ECDIS, AI, and predictive models in underwriting and pricing. Although not a formal regulation, this guidance sets important expectations for AI use in insurance.

- National Association of Insurance Commissioners (NAIC) Model Bulletin (December 2023): A model bulletin adopted by 17 states so far, advising insurers on responsible AI use, governance, and oversight.

This regulatory patchwork creates challenges for insurers, who must ensure that their AI systems are compliant across state lines while avoiding risks such as bias and discrimination. At Trustible, we’ve developed a solution to simplify this legal complexity.

Trustible’s New US Insurance AI Framework

Our new US Insurance AI Framework brings clarity to this tangled web of rules and regulations, empowering insurers to meet compliance standards more efficiently. Here’s how our platform helps:

1. Simplified Language for Complex Regulations

Insurance regulations are often dense. Trustible translates these complex requirements into plain, actionable language, helping insurers understand their obligations faster and saving valuable time and resources. Our platform makes compliance accessible, so that every stakeholder, from legal teams to data scientists, can grasp the requirements of each regulation.

2. A Structured, Comprehensive Approach

Trustible’s framework provides a structured approach that helps insurers organize their compliance efforts at every level: the organization, the use case, and the individual AI model. The platform creates a clear compliance checklist that covers essential processes and procedures and ensures that all aspects of AI governance are considered.

By cross-mapping regulatory requirements, Trustible enables insurers to see where different state regulations align and where they diverge. With this mapping, insurers can address the various regulatory standards together, reducing duplicative effort and avoiding compliance gaps.
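For readers who think in code, here is a rough sketch of what cross-mapping can look like as a data structure. It is not Trustible's actual data model: the control names, the `CONTROL_MAP` table, and the helper functions are hypothetical, and the control-to-regulation assignments are placeholders rather than a legal reading of any regulation.

```python
from collections import defaultdict

# Hypothetical cross-map: internal control -> regulations it is assumed to address.
# These assignments are illustrative placeholders, not legal analysis.
CONTROL_MAP = {
    "risk_management_program": {"CO Life Insurance Regulation", "NAIC Model Bulletin"},
    "ecdis_bias_testing": {"CO Life Insurance Regulation", "NY DFS Circular Letter No. 7"},
    "regulator_compliance_report": {"CO Life Insurance Regulation"},
}


def regulations_to_controls(control_map):
    """Invert the map so each regulation lists the controls mapped to it."""
    inverted = defaultdict(set)
    for control, regulations in control_map.items():
        for regulation in regulations:
            inverted[regulation].add(control)
    return dict(inverted)


def shared_and_unique(control_map):
    """Split controls into those mapped to several regulations and those mapped to one."""
    shared = {c for c, regs in control_map.items() if len(regs) > 1}
    unique = set(control_map) - shared
    return shared, unique


def compliance_gaps(control_map, implemented, regulation):
    """Return controls mapped to a regulation that are not yet implemented."""
    required = regulations_to_controls(control_map).get(regulation, set())
    return required - set(implemented)


if __name__ == "__main__":
    shared, unique = shared_and_unique(CONTROL_MAP)
    print("Shared across regulations:", sorted(shared))
    print("Specific to one regulation:", sorted(unique))
    gaps = compliance_gaps(
        CONTROL_MAP, ["risk_management_program"], "CO Life Insurance Regulation"
    )
    print("Open gaps for Colorado:", sorted(gaps))
```

Controls that map to more than one regulation are where duplicated effort can be avoided, and the gap check flags anything a given regulation still needs.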

3. Practical, Actionable Guidance

Regulations often outline what needs to be done, but not how to do it. Our framework offers practical guidance through guiding questions and tools that help insurers implement real-world compliance measures. This hands-on approach provides clarity and direction, empowering insurers to put policies into practice without getting bogged down by theoretical guidelines. For instance, insurers can quickly set up risk management programs that align with Colorado’s requirements while ensuring that they’re adhering to New York’s predictive modeling guidance.

4. Streamlining Documentation & Regulatory Reporting

One of the most time-consuming aspects of regulatory compliance is maintaining up-to-date documentation and submitting regulatory reports. Trustible simplifies this process by creating pre-populated templates and documentation workflows. Insurers can now generate the necessary compliance reports quickly, ensuring they meet deadlines without the usual administrative burden.

Why Trustible?

With our US Insurance AI Framework, Trustible offers insurers a one-stop solution for AI governance and regulatory compliance. By integrating key insurance regulations into a single platform, we help organizations manage the risks of AI adoption and maintain responsible AI practices—no matter where they operate. Our framework is built to evolve with the regulations, ensuring your organization is always up to date and ready for what’s next in AI governance.